The information in this chapter is not a complete guide to shading languages; our goal here is to cover the general concepts that you will need in order to understand and use the Open Inventor shader classes.

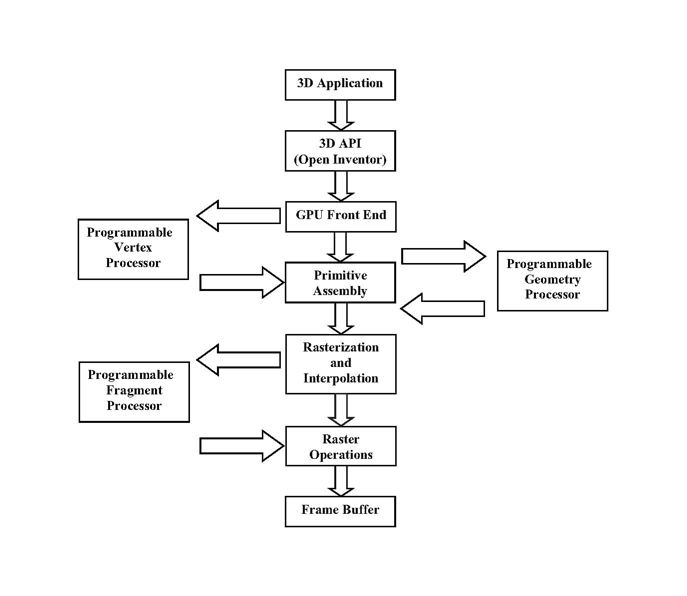

The recent trend in graphics hardware has been to replace fixed functionality with programmability in two units of the GPU: the vertex processor unit and the fragment processor unit. On more recent graphics hardware, a third programmable unit is available: the geometry processor unit.

A program at the vertex level is called a vertex program or vertex shader and runs on the vertex processor unit. A program at the geometry level is called a geometry program or a geometry shader and runs on the geometry processor unit. Finally, a program at the pixel level is called a fragment program, fragment shader, or pixel shader and runs on the fragment processor unit.

Vertex processing involves the operations that occur at each vertex, whereas fragment processing concerns the operations that occur at each fragment.

Figure 21.1, “Programmable graphics pipeline” illustrates the vertex processing, geometry processing, and fragment processing stages in the pipeline of a programmable GPU.

Code intended for execution on one of the OpenGL programmable processors is called a shader. The shading languages used to write shaders fall into two groups: assembly languages, which allow shaders to be written directly in a hardware-executable form, and high-level languages, which are aimed at a much larger group of developers.

GLSL (the OpenGL Shading Language) is the only shading language supported in Open Inventor. Here is a quick description of it:

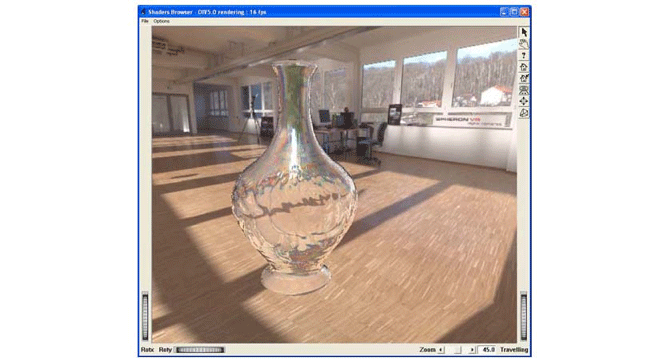

Programmable graphics hardware offers new capabilities in terms of rendering and particularly in terms of “realistic” real-time rendering. It offers the ability to create natural effects such as simulated caustics in water, smoke, fire, and so on.

A wide range of materials (translucent or opaque) can be rendered more accurately by applying complex lighting and surface reflection models, high-dynamic-range environment lighting, separable bi-directional reflectance distribution function (BRDF) techniques, and many others.

Until now, the bottleneck when animating a 3D scene was the transfer of data from main memory to the graphics board over the AGP bus (and, more recently, the PCI Express bus). With programmable GPUs, animations can be coded directly in the vertex processor unit, avoiding this time-consuming transfer.
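As a rough illustration of this point, the following C++ sketch emulates on the CPU what an animating vertex shader would do: it displaces each vertex along a sine wave driven by a user-defined uniform, `time`. All names here (`waveVertexShader`, `time`, `amplitude`, the byte-count helpers) are hypothetical, and real code would run in GLSL on the GPU. The idea it shows is that the rest positions are uploaded once, and each frame only the uniform changes.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical CPU emulation of an animating vertex shader (not real GLSL).
struct Vec3 { float x, y, z; };

// Emulated vertex shader: displaces the vertex along a sine wave travelling in x.
// `time` and `amplitude` stand in for user-defined uniform parameters.
Vec3 waveVertexShader(const Vec3& restPosition, float time, float amplitude) {
    Vec3 p = restPosition;
    p.y += amplitude * std::sin(p.x + time);
    return p;
}

// What the GPU does each frame: run the vertex shader once per vertex,
// starting from rest positions that were uploaded only once.
std::vector<Vec3> animateFrame(const std::vector<Vec3>& restMesh, float time, float amplitude) {
    std::vector<Vec3> out;
    out.reserve(restMesh.size());
    for (const Vec3& v : restMesh) out.push_back(waveVertexShader(v, time, amplitude));
    return out;
}

// Data sent to the graphics board per frame if the CPU animates the mesh...
std::size_t bytesPerFrameCpuAnimation(std::size_t vertexCount) {
    return vertexCount * sizeof(Vec3);
}

// ...versus when the vertex shader animates it: only the `time` uniform changes.
std::size_t bytesPerFrameShaderAnimation() {
    return sizeof(float);
}
```

With 10,000 vertices and the usual 12-byte `Vec3`, the CPU approach moves about 120,000 bytes per frame, against 4 bytes for the single uniform.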

High-level shading languages resemble classical sequential programming languages such as “C”, while low-level shading languages resemble the assembly languages used on a CPU. A vertex shader is a main function (which can call other functions) that takes at least the vertex input parameters and returns vertex parameters modified by the program. A geometry shader is a main function that takes as input the vertices of a primitive output by the vertex shader and generates geometric primitives as a set of triangles, lines, or points. In the same way, a fragment shader is a main function that takes at least the fragment input parameters and returns a final color and depth.
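To compare the three stages side by side, the following C++ sketch models each one as a plain function. It is an analogy only: the types and function names are hypothetical, not GLSL and not an Open Inventor API, and every stage is a pass-through so that only the shape of the inputs and outputs matters.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Hypothetical model of the three shader stages as ordinary C++ functions.
struct Vec4 { float x, y, z, w; };

struct VertexIn  { Vec4 position; Vec4 color; };   // per-vertex inputs
struct VertexOut { Vec4 position; Vec4 color; };   // outputs handed downstream
using Triangle = std::array<VertexOut, 3>;          // one input primitive (triangles only here)

struct FragmentIn  { Vec4 color; float depth; };    // interpolated per-fragment inputs
struct FragmentOut { Vec4 color; float depth; };    // final color and depth

// Vertex stage: called once per vertex, returns one (possibly modified) vertex.
VertexOut vertexShader(const VertexIn& in) {
    return {in.position, in.color};  // pass-through; a real shader transforms here
}

// Geometry stage: called once per primitive, may emit zero, one, or many primitives.
std::vector<Triangle> geometryShader(const Triangle& tri) {
    return {tri};  // pass-through: emits the input triangle unchanged
}

// Fragment stage: called once per fragment, returns a color and a depth.
FragmentOut fragmentShader(const FragmentIn& in) {
    return {in.color, in.depth};
}
```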

Vertex shaders operate only on a series of vertices and thus can only alter vertex properties such as position, color, and texture coordinates. The vertices computed by vertex shaders are then passed to the geometry shader, if one is present.

Geometry shaders can add vertices to, and remove vertices from, a mesh. They can be used to generate geometry procedurally or to add volumetric detail to existing meshes that would be too costly to process on the CPU.
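The following C++ sketch emulates both behaviors on the CPU for a single triangle: degenerate triangles are removed (nothing is emitted), and the others are amplified by splitting them into four. The function names and the area threshold are hypothetical; a real GLSL geometry shader would emit vertices as triangle strips rather than return a list.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical CPU emulation of a geometry shader that adds or removes geometry.
struct Vec3 { float x, y, z; };
using Tri = std::array<Vec3, 3>;

Vec3 midpoint(const Vec3& a, const Vec3& b) {
    return {(a.x + b.x) * 0.5f, (a.y + b.y) * 0.5f, (a.z + b.z) * 0.5f};
}

// Twice the triangle area: the length of the cross product of two edges.
float doubleArea(const Tri& t) {
    Vec3 u{t[1].x - t[0].x, t[1].y - t[0].y, t[1].z - t[0].z};
    Vec3 v{t[2].x - t[0].x, t[2].y - t[0].y, t[2].z - t[0].z};
    Vec3 c{u.y * v.z - u.z * v.y, u.z * v.x - u.x * v.z, u.x * v.y - u.y * v.x};
    return std::sqrt(c.x * c.x + c.y * c.y + c.z * c.z);
}

// Emulated geometry shader: drops degenerate triangles, splits the others into four.
std::vector<Tri> subdivideOrCull(const Tri& t, float minDoubleArea = 1e-6f) {
    if (doubleArea(t) < minDoubleArea) return {};   // remove: emit no primitive
    Vec3 m01 = midpoint(t[0], t[1]);
    Vec3 m12 = midpoint(t[1], t[2]);
    Vec3 m20 = midpoint(t[2], t[0]);
    return {                                         // add: emit four primitives
        Tri{t[0], m01, m20},
        Tri{m01, t[1], m12},
        Tri{m20, m12, t[2]},
        Tri{m01, m12, m20},
    };
}
```

The four sub-triangles keep the winding order of the input and together cover exactly the same area.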

Fragment shaders calculate the color value of individual pixels when the polygons produced by the vertex and geometry shaders are rasterized. They are typically used for scene lighting and related effects such as bump mapping and color toning.

Warning: It is important to keep in mind that a vertex shader is called for each vertex, and a fragment shader is called for each fragment (roughly, each covered pixel). That is why, for instance, a per-pixel lighting fragment shader is less efficient in terms of performance (but gives higher-quality results) than a classical per-vertex lighting vertex shader: there are generally far fewer vertices than pixels to display.
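To put illustrative numbers on the cost side: a cube drawn with 24 vertices that covers about 90,000 pixels on screen runs per-vertex lighting code 24 times, but per-pixel lighting code about 90,000 times. The C++ sketch below shows the quality side, under stated assumptions: a Blinn-Phong specular term, light and viewer straight overhead (so the half-vector is (0, 0, 1)), and two vertex normals tilted 45° to either side. Lighting the vertices and interpolating the result misses the highlight in the middle; interpolating the normal and lighting each fragment finds it. The helper names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(const Vec3& v) {
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

Vec3 lerp(const Vec3& a, const Vec3& b, float t) {
    return {a.x + (b.x - a.x) * t, a.y + (b.y - a.y) * t, a.z + (b.z - a.z) * t};
}

// Blinn-Phong specular term for a unit normal n and unit half-vector h.
float specular(const Vec3& n, const Vec3& h, float shininess) {
    return std::pow(std::fmax(dot(n, h), 0.0f), shininess);
}

// Per-vertex lighting: evaluate at both endpoints, then interpolate the result.
float perVertexSpecular(const Vec3& n0, const Vec3& n1, const Vec3& h, float shininess, float t) {
    float s0 = specular(normalize(n0), h, shininess);
    float s1 = specular(normalize(n1), h, shininess);
    return s0 + (s1 - s0) * t;
}

// Per-pixel lighting: interpolate the normal, renormalize, then evaluate per fragment.
float perPixelSpecular(const Vec3& n0, const Vec3& n1, const Vec3& h, float shininess, float t) {
    Vec3 n = normalize(lerp(normalize(n0), normalize(n1), t));
    return specular(n, h, shininess);
}
```

At the midpoint (t = 0.5) with a shininess of 32, the per-vertex result stays near 0.7071^32 (about 1.5e-5), while the per-pixel result is 1: the highlight only exists between the vertices.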

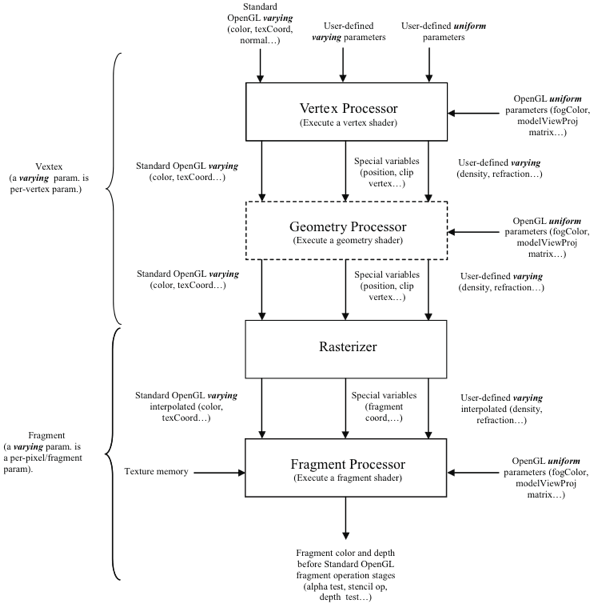

As with classical programming languages, such as “C”, vertex, geometry and fragment shaders accept input data and return the results as output.

All shading languages follow the logical diagram of Figure 21.2, “Vertex, geometry and fragment processor inputs/outputs”, even though some differences, detailed below, exist between the different languages.

Uniform means, in the case of a vertex/geometry shader, a value that does not change from one vertex to the next, and, in the case of a fragment shader, a value that does not change from one fragment to the next.

OpenGL uniform parameters: With GLSL, OpenGL state is accessible within shaders through built-in uniform variables. For example, gl_ModelViewMatrix is the current modelview matrix and gl_Fog.color is the current fog color.
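In GLSL, a vertex shader typically uses this built-in uniform in the expression `gl_ModelViewMatrix * gl_Vertex`. The C++ sketch below emulates that expression over a whole draw call to show what "uniform" means in practice: the matrix is identical for every call, while the vertex changes each time. It assumes OpenGL's column-major matrix layout; the helper names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Column-major 4x4 matrix, the layout OpenGL uses for gl_ModelViewMatrix.
using Mat4 = std::array<float, 16>;
using Vec4 = std::array<float, 4>;

Mat4 translation(float tx, float ty, float tz) {
    Mat4 m{};                               // all zeros
    m[0] = m[5] = m[10] = m[15] = 1.0f;     // identity diagonal
    m[12] = tx; m[13] = ty; m[14] = tz;     // the fourth column holds the translation
    return m;
}

// Equivalent of the GLSL expression `mat4 * vec4`.
Vec4 mul(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            r[row] += m[col * 4 + row] * v[col];
    return r;
}

// Emulates `gl_ModelViewMatrix * gl_Vertex` for every vertex of a draw:
// the uniform matrix never changes, the vertex input changes on each call.
std::vector<Vec4> transformAll(const Mat4& modelView, const std::vector<Vec4>& vertices) {
    std::vector<Vec4> eyeSpace;
    eyeSpace.reserve(vertices.size());
    for (const Vec4& v : vertices) eyeSpace.push_back(mul(modelView, v));
    return eyeSpace;
}
```

With `translation(1, 0, 0)`, the origin maps to (1, 0, 0, 1) and (0.5, 0.5, 0.5, 1) maps to (1.5, 0.5, 0.5, 1).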

User-defined uniform parameters:

Regardless of the selected shading language, these parameters should be provided by the application.

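Varying means, in the case of a vertex/geometry shader, a value that can change from one vertex to the next, and, in the case of a fragment shader, a value that can change from one fragment to the next. Varying values written for each vertex are interpolated across each primitive to produce the per-fragment values.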

OpenGL varying parameters:

Regardless of the selected shading language, these parameters are implicitly accessible to vertex, geometry, and fragment shaders. They correspond to standard OpenGL calls in your application (for instance glColor and glNormal). With GLSL, they are exposed as built-in variables: for example, in a vertex shader, gl_Color is the vertex's color and gl_Normal is the vertex's normal.

User-defined varying parameters:

Regardless of the selected shading language, these generic parameters must be provided by the application at each vertex, in the same way as normals or texture coordinates are supplied.
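The following C++ sketch shows how a varying value supplied per vertex (here, a color) becomes a per-fragment value: the rasterizer blends the three vertex values of a triangle using barycentric weights. This is a simplified model under one stated assumption: it interpolates linearly in screen space, whereas GPUs normally also apply perspective correction. The function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };
using Color = std::array<float, 3>;

// Barycentric weights of screen point p inside triangle (a, b, c).
std::array<float, 3> barycentric(Vec2 p, Vec2 a, Vec2 b, Vec2 c) {
    float area = (b.x - a.x) * (c.y - a.y) - (c.x - a.x) * (b.y - a.y);  // twice the signed area
    float wb = ((p.x - a.x) * (c.y - a.y) - (c.x - a.x) * (p.y - a.y)) / area;
    float wc = ((b.x - a.x) * (p.y - a.y) - (p.x - a.x) * (b.y - a.y)) / area;
    return {1.0f - wb - wc, wb, wc};
}

// Blends the three per-vertex values of a varying into one per-fragment value.
Color interpolateVarying(const Color& c0, const Color& c1, const Color& c2,
                         const std::array<float, 3>& w) {
    assert(std::fabs(w[0] + w[1] + w[2] - 1.0f) < 1e-5f);  // weights must sum to 1
    Color out{};
    for (int i = 0; i < 3; ++i) out[i] = w[0] * c0[i] + w[1] * c1[i] + w[2] * c2[i];
    return out;
}
```

For a triangle with red, green, and blue vertices, a fragment at the centroid receives one third of each color, and a fragment exactly on a vertex receives that vertex's color.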

To apply textures within fragment shaders (and, with GLSL only, within vertex shaders as well), all shading languages provide access to texture memory.

With GLSL, a set of basic types called samplers must be used. Samplers are opaque variables used to access a particular texture map from within vertex and fragment shaders. The application must set each sampler, as a uniform parameter, to the corresponding texture unit.
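In plain OpenGL, the application sets a sampler with `glUniform1i(location, unit)`, so the value the shader sees is simply a texture unit index. The C++ sketch below models that indirection on the CPU: textures are bound to units, the "sampler" is an integer, and a lookup roughly matches `texture2D(sampler, uv)` with nearest filtering and clamp-to-edge wrapping. The types, the choice of 8 units, and the function names are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using RGBA = std::array<float, 4>;

// A texture image stored as row-major RGBA texels.
struct Texture {
    int width;
    int height;
    std::vector<RGBA> texels;
};

// The (emulated) texture units; the application binds textures here.
struct TextureUnits {
    std::array<const Texture*, 8> bound{};   // 8 units is an arbitrary choice
};

// Emulates texture2D(sampler, uv) with nearest filtering and clamp-to-edge wrapping.
// `samplerUnit` plays the role of the sampler uniform: just a texture unit index.
RGBA sample(const TextureUnits& units, int samplerUnit, float u, float v) {
    const Texture* tex = units.bound.at(static_cast<std::size_t>(samplerUnit));
    assert(tex != nullptr && !tex->texels.empty());
    int x = static_cast<int>(std::floor(u * tex->width));
    int y = static_cast<int>(std::floor(v * tex->height));
    x = std::max(0, std::min(x, tex->width - 1));   // clamp to edge
    y = std::max(0, std::min(y, tex->height - 1));
    return tex->texels[static_cast<std::size_t>(y * tex->width + x)];
}
```

If the sampler is left pointing at a unit with no texture bound, this model fails its assertion; on real hardware the result is not what the author intended either, which is why setting the sampler uniform matters.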

Warning: Regardless of the shading language used, the standard OpenGL pipeline is modified when you load a vertex and/or fragment shader. Developers need to be aware of this behavior to avoid unexpected results.

Vertex shaders replace the following parts of the OpenGL graphics pipeline:

- Vertex transformation
- Normal transformation, normalization, and rescaling
- Lighting
- Color material application
- Clamping of colors
- Texture coordinate generation
- Texture coordinate transformation

They do not replace:

- Perspective divide and viewport mapping
- Frustum and user clipping
- Backface culling
- Primitive assembly
- Two-sided lighting selection
- Polygon offset
- Polygon mode
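To make the replaced stages concrete, the following C++ sketch emulates part of what a vertex shader must now do itself: transform the normal (GLSL exposes the matrix for this as `gl_NormalMatrix`), normalize it, compute lighting, and clamp the resulting color. It is deliberately simplified under stated assumptions: one directional light given in eye space, a diffuse term only (no ambient, specular, or attenuation), and hypothetical function names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Mat3 = std::array<float, 9>;   // column-major, like gl_NormalMatrix

Vec3 mul(const Mat3& m, const Vec3& v) {
    Vec3 r{};
    for (int row = 0; row < 3; ++row)
        for (int col = 0; col < 3; ++col)
            r[row] += m[col * 3 + row] * v[col];
    return r;
}

Vec3 normalize(const Vec3& v) {
    float len = std::sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
    return {v[0] / len, v[1] / len, v[2] / len};
}

// Diffuse-only vertex lighting. `lightDirEye` is a unit vector pointing toward the light.
Vec3 diffuseVertexColor(const Mat3& normalMatrix, const Vec3& objectNormal,
                        const Vec3& lightDirEye, const Vec3& lightDiffuse,
                        const Vec3& materialDiffuse) {
    // Normal transformation and normalization.
    Vec3 n = normalize(mul(normalMatrix, objectNormal));
    // Lighting: Lambert's cosine term, zero for surfaces facing away from the light.
    float nDotL = std::max(0.0f, n[0] * lightDirEye[0] + n[1] * lightDirEye[1] + n[2] * lightDirEye[2]);
    Vec3 c{};
    for (int i = 0; i < 3; ++i)  // clamping of colors to [0, 1]
        c[i] = std::min(1.0f, std::max(0.0f, lightDiffuse[i] * materialDiffuse[i] * nDotL));
    return c;
}
```

Leaving out any of these steps in a real vertex shader produces exactly the kind of "unexpected results" the warning above describes, for example unlit geometry or colors brighter than 1.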

Fragment shaders replace the following parts of the OpenGL graphics pipeline:

- Operations on interpolated values
- Pixel zoom
- Texture access
- Scale and bias
- Texture application
- Color table lookup
- Fog
- Convolution
- Color sum
- Color matrix

They do not replace:

- Shading model
- Histogram
- Coverage
- Minmax
- Pixel ownership test
- Pixel packing and unpacking
- Scissor
- Stipple
- Alpha test
- Depth test
- Stencil test
- Alpha blending
- Logical ops
- Dithering
- Plane masking
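Fog is a good example of a replaced stage: once a fragment shader is loaded, fog is no longer applied unless the shader computes it. The C++ sketch below emulates OpenGL's linear (`GL_LINEAR`) fog: the factor f = (end − c) / (end − start), clamped to [0, 1], where c is the fragment's eye distance, and the final RGB is f × fragment color + (1 − f) × fog color, with alpha left unchanged. In GLSL the same computation can use the built-in `gl_Fog` uniform (`gl_Fog.start`, `gl_Fog.end`, `gl_Fog.color`) and `mix()`. The C++ function names are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Color = std::array<float, 4>;   // RGBA

// Linear fog factor: 1 = no fog, 0 = fully fogged.
float linearFogFactor(float eyeDistance, float fogStart, float fogEnd) {
    float f = (fogEnd - eyeDistance) / (fogEnd - fogStart);
    return std::min(1.0f, std::max(0.0f, f));
}

// Blends the fragment's RGB toward the fog color; alpha is left unchanged,
// as in the fixed-function pipeline.
Color applyFog(const Color& fragColor, const Color& fogColor, float f) {
    Color out = fragColor;
    for (int i = 0; i < 3; ++i) out[i] = f * fragColor[i] + (1.0f - f) * fogColor[i];
    return out;
}
```

For example, with fog between distances 10 and 20, a fragment at distance 15 gets f = 0.5, so a red fragment blended with mid-gray fog becomes (0.75, 0.25, 0.25).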

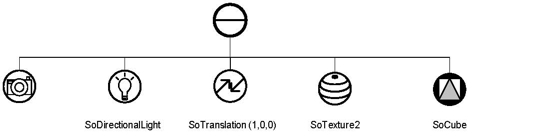

This implies that if you have the following very simple scene graph:

You will get a textured cube centered at (1, 0, 0).

Now imagine that you have a way, described in the next paragraph, to apply a vertex shader and a fragment shader to this cube. You write a basic vertex shader that takes the vertex position as an implicit parameter and returns it unchanged, and a basic fragment shader that takes the fragment color as an implicit parameter and returns it unchanged.

If you expect to still get a textured cube, you are mistaken! In the best case, you will get a flat white cube. This is because all the transformations, texture application, lighting computations, and so on are replaced by the vertex and fragment shaders. These shaders do not perform any of these operations by default; you must write them yourself.

Never fear: shading languages offer high-level built-in functions that make these computations easy to write (in GLSL, for example, ftransform() and texture2D()).
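As a sketch of what "writing them yourself" looks like, here is a vertex/fragment pair that restores vertex transformation, texture coordinate transformation, and texture application, stored as C++ raw strings the way an application might embed shader source. It assumes GLSL 1.10/1.20 with the compatibility built-ins (`ftransform()`, `gl_MultiTexCoord0`, `gl_TextureMatrix`, `texture2D()`); the sampler name `colorMap` and the string names are hypothetical, and how the strings are handed to Open Inventor's shader nodes is not shown.

```cpp
#include <cassert>
#include <string>

// Vertex shader: redoes the work the fixed pipeline no longer does.
const std::string kVertexShaderSource = R"(
void main()
{
    gl_Position    = ftransform();                             // vertex transformation
    gl_TexCoord[0] = gl_TextureMatrix[0] * gl_MultiTexCoord0;  // texture coordinate transformation
    gl_FrontColor  = gl_Color;                                 // pass the color on (no lighting)
}
)";

// Fragment shader: redoes texture access and application (modulate-style).
const std::string kFragmentShaderSource = R"(
uniform sampler2D colorMap;   // hypothetical name; set by the application to the texture unit in use

void main()
{
    gl_FragColor = texture2D(colorMap, gl_TexCoord[0].st) * gl_Color;
}
)";
```

This pair deliberately restores only transformation and texturing, so the cube comes out textured but unlit; per-vertex or per-pixel lighting would have to be added to the shaders as well.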