These have already been mentioned in Section 1.1.2, “VolumeViz Features” under Volume Properties. In this section we provide more details about the following volume characteristics and how to set and/or query them.

Volume characteristics

The dimensions of the volume (number of voxels on each axis) are normally determined by the volume reader from information in the data file(s), the number of images in the stack, etc. (When you set the data field directly, you specify the volume dimensions yourself.) You can also query the volume dimensions using the data field. For example:

C++: `SbVec3i32 voldim = pVolumeData->data.getSize();`

.NET: `SbVec3i32 voldim = VolumeData.data.GetSize();`

Java: `SbVec3i32 voldim = volumeData.data.getSize();`

The geometric extent of the volume in 3D is initially determined by the volume reader but can also be set using the extent field. The volume extent is the bounding box of the volume in world coordinates. Often the volume extent is set equal to the dimensions of the volume or to values proportional to the volume dimensions. For example, using -1 to 1 on the longest axis of the volume and proportional values on the other axes puts the origin (0,0,0) at the center of the volume, which simplifies rotations. However, the volume extent can be any range, for example the range of line numbers in a seismic survey. The volume extent indirectly specifies the voxel size/spacing (see below). You can query the volume extent using the extent field. For example:

C++: `SbBox3f volext = pVolumeData->extent.getValue();`

.NET: `SbBox3f volext = VolumeData.extent.Value;`

Java: `SbBox3f volext = volumeData.extent.getValue();`
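The following is a sketch of the "-1 to 1 on the longest axis" convention described above. The function computes an extent centered on the origin from the volume dimensions. It assumes equal voxel spacing on all axes. The `Extent` struct and `centeredExtent` function are hypothetical, and they use plain `std::array` in place of SbVec3i32/SbBox3f so the example compiles without Open Inventor. An application would copy the result into the extent field.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

// Hypothetical stand-in for SbBox3f: minimum and maximum corner of the box.
struct Extent {
    std::array<float, 3> min;
    std::array<float, 3> max;
};

// Returns an extent centered on (0,0,0): the longest axis spans -1..1 and the
// other axes are scaled in proportion to their voxel counts.
// Assumes the voxel spacing is the same on all three axes.
Extent centeredExtent(const std::array<int, 3>& dims) {
    assert(dims[0] > 0 && dims[1] > 0 && dims[2] > 0);
    const int longest = *std::max_element(dims.begin(), dims.end());
    Extent e{};
    for (int axis = 0; axis < 3; ++axis) {
        const float half = static_cast<float>(dims[axis]) / static_cast<float>(longest);
        e.min[axis] = -half;
        e.max[axis] = half;
    }
    return e;
}
```

For a 256 x 128 x 64 volume this gives -1..1 on X, -0.5..0.5 on Y and -0.25..0.25 on Z.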

Important:

The volume extent and orientation (like those of geometry) can be modified by transform nodes in the scene graph, and this in turn modifies the appearance of volume rendering nodes (SoVolumeRender, SoOrthoSlice, etc.). However, the same transformation must be applied to the volume data node and to all rendering nodes associated with that volume. In practice, this means that any transformation nodes that affect the volume must be placed before the volume data node.

If the volume data is uniformly sampled, the voxel size on each axis is the volume extent along that axis divided by the number of voxels on that axis. The voxel size often differs on at least one axis, although it is still uniform along each axis. This is typical of medical scanner volumes, which often have a larger spacing along the volume Z axis. The volume dimensions are fixed, but the volume extent can be specified by the application and even changed at runtime, for example if the initial information supplied by the reader is not correct.
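The per-axis division can be written out as follows. The formula is the one stated above: the extent length on each axis divided by the number of voxels on that axis. The function name `voxelSize` and the use of `std::array` instead of Open Inventor types are illustrative assumptions.

```cpp
#include <array>
#include <cassert>

// Voxel size on each axis of a uniformly sampled volume:
// (extent max - extent min) / number of voxels on that axis.
std::array<float, 3> voxelSize(const std::array<float, 3>& extMin,
                               const std::array<float, 3>& extMax,
                               const std::array<int, 3>& dims) {
    std::array<float, 3> size{};
    for (int axis = 0; axis < 3; ++axis) {
        assert(dims[axis] > 0);
        size[axis] = (extMax[axis] - extMin[axis]) / static_cast<float>(dims[axis]);
    }
    return size;
}
```

Consider a hypothetical 512 x 512 x 100 scan with an extent of 0 to 256 on X and Y and 0 to 250 on Z. It has a voxel size of 0.5 x 0.5 x 2.5, with the larger Z spacing typical of medical data.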

VolumeViz is not limited to uniformly spaced voxels. VolumeViz also supports “rectilinear” coordinates, where the non-uniform voxel positions are explicitly given for each axis. This information is supplied by the volume reader and can be queried using the SoVolumeData methods getCoordinateType() and getRectilinearCoordinates().
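Rectilinear coordinates need a different lookup from uniform ones. With uniform spacing, the voxel index along an axis is simply (position - extent min) / voxel size. With explicit per-axis positions, a search is needed instead. This section does not describe the exact layout of the array returned by getRectilinearCoordinates(). The sketch below therefore assumes, hypothetically, that one axis is described by a strictly increasing array of voxel positions, and it finds the nearest voxel with a binary search. It only illustrates the idea and is not how VolumeViz works internally.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the index of the voxel whose position is closest to 'pos' along one axis.
// 'coords' is assumed (hypothetically) to hold strictly increasing voxel positions.
std::size_t nearestVoxel(const std::vector<float>& coords, float pos) {
    assert(!coords.empty());
    // First position that is >= pos.
    auto it = std::lower_bound(coords.begin(), coords.end(), pos);
    if (it == coords.begin()) return 0;                  // before the first voxel
    if (it == coords.end()) return coords.size() - 1;    // past the last voxel
    auto prev = it - 1;
    // Pick whichever neighbor is closer; ties go to the lower index.
    return static_cast<std::size_t>((pos - *prev) <= (*it - pos) ? prev - coords.begin()
                                                                  : it - coords.begin());
}
```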

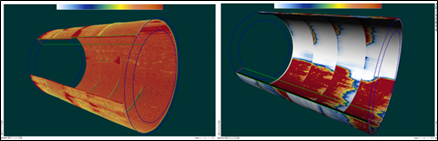

VolumeViz also supports “projection” using Open Inventor’s SoProjection node (with some limitations). This allows visually correct rendering of volumes defined in, for example, cylindrical coordinates or geographic coordinates.

VolumeViz supports scalar volumes containing signed and unsigned integer values (byte, short, int) or floating-point values. VolumeViz also supports RGBA volumes containing 32-bit RGBA (color plus alpha) values. The data type is determined by the reader (or by the application when it sets the data field). You can query the data type and/or the number of bytes per voxel using methods inherited from SoDataSet. For example:

C++: `int bytesPerVoxel = pVolumeData->getDataSize(); SoDataSet::DataType type = pVolumeData->getDataType();`

.NET: `int bytesPerVoxel = VolumeData.GetDataSize(); SoDataSet.DataTypes type = VolumeData.GetDataType();`

Java: `int bytesPerVoxel = volumeData.getDataSize(); SoDataSet.DataTypes type = volumeData.getDataType();`

In volumes using data types larger than byte, the actual range of data values is usually smaller than the range of the data type. The application should use an SoDataRange node to specify the range of values that will be mapped into the transfer function. You can query the actual minimum and maximum values in the volume using the getMinMax methods. For example:

C++: `double minval, maxval; SbBool ok = pVolumeData->getMinMax( minval, maxval );`

.NET: `double minval, maxval; bool ok = VolumeData.GetMinMax( out minval, out maxval );`

Java: `double[] minmax = volumeData.getDoubleMinMax();`

However, note that if the volume reader does not respond to the getMinMax() query, these methods force VolumeViz to load the entire data set into memory and scan through all the voxels to compute the min and max values. In an LDM format data set, for example, the min and max values are stored in the LDM header and the volume reader implements getMinMax(), so the query is very fast. This is not true for most of the other formats directly supported by VolumeViz. However, if you convert your data to LDM format (as we recommend), the converter program computes the min and max values and stores them in the LDM header file.
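The sketch below shows what the full scan amounts to. It visits every voxel once. It then shows how the resulting min and max could serve as the window that an SoDataRange node maps into the transfer function. Both functions are hypothetical illustrations. The 16-bit voxel type, the linear mapping and the clamping of out-of-range values are assumptions, not documented VolumeViz behavior.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <utility>
#include <vector>

// Brute-force min/max over every voxel: the expensive path taken when the
// reader cannot answer getMinMax() from header information.
std::pair<double, double> scanMinMax(const std::vector<std::int16_t>& voxels) {
    assert(!voxels.empty());
    const auto [lo, hi] = std::minmax_element(voxels.begin(), voxels.end());
    return {static_cast<double>(*lo), static_cast<double>(*hi)};
}

// Linearly maps 'value' into a color map of 'mapSize' entries over the window
// [rangeMin, rangeMax]. Values outside the window are clamped (an assumption).
int colorMapIndex(double value, double rangeMin, double rangeMax, int mapSize) {
    assert(rangeMax > rangeMin && mapSize > 0);
    const double t = std::clamp((value - rangeMin) / (rangeMax - rangeMin), 0.0, 1.0);
    return std::min(static_cast<int>(t * mapSize), mapSize - 1);
}
```

With a window of -300 to 4000 and a 256-entry color map, the value 1850 (the midpoint) maps to entry 128.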

By default, VolumeViz scales data values of all types larger than 8 bits down to 8-bit unsigned integer values on the GPU. For rendering purposes this is usually sufficient, and using a small data type maximizes the amount of data that can be loaded into the available GPU memory. If necessary, you can tell VolumeViz to use 12-bit integer values by setting the texturePrecision field on the SoVolumeData node. Note that the 12-bit option actually uses 16-bit textures to store the data, so it requires twice as much GPU memory.
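The memory trade-off can be estimated with simple arithmetic. The sketch below combines the voxel count with the bytes per voxel of the source data (as returned by getDataSize()). It then applies the GPU storage sizes stated above: 1 byte per voxel by default, and 2 bytes per voxel for the 12-bit option stored in 16-bit textures. The `GpuPrecision` enum and the function names are hypothetical. The figures describe the full-resolution data only and ignore any per-tile or multiresolution overhead.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical selector mirroring the two scalar GPU storage options in the text.
enum class GpuPrecision { Bits8, Bits12 };

// Voxel count of the full-resolution volume, computed in 64 bits to avoid overflow.
std::uint64_t voxelCount(std::int64_t nx, std::int64_t ny, std::int64_t nz) {
    assert(nx > 0 && ny > 0 && nz > 0);
    return static_cast<std::uint64_t>(nx) * static_cast<std::uint64_t>(ny) *
           static_cast<std::uint64_t>(nz);
}

// Bytes needed for the data as read, given the bytes per voxel of its data type.
std::uint64_t sourceBytes(std::uint64_t voxels, int bytesPerVoxel) {
    assert(bytesPerVoxel > 0);
    return voxels * static_cast<std::uint64_t>(bytesPerVoxel);
}

// Bytes needed on the GPU for scalar data: 1 byte per voxel by default,
// 2 bytes per voxel for the 12-bit option (held in 16-bit textures).
std::uint64_t gpuBytes(std::uint64_t voxels, GpuPrecision precision) {
    return voxels * (precision == GpuPrecision::Bits8 ? 1u : 2u);
}
```

For example, a 512 x 512 x 512 volume of 16-bit values occupies 256 MiB as read. It needs 128 MiB on the GPU with the default 8-bit storage and 256 MiB with the 12-bit option.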

Conceptually, the issue to consider is that (depending on data type and data range) a range of actual data values may be "aliased" onto each GPU value. For example, all the data values in the range 32 to 47 might end up as the same value in GPU memory. For rendering, the main advantage of using a larger data type on the GPU is that it allows a larger, more precise color map. You could consider this an improvement in image quality, but it is really about (possibly) being able to perceive small differences in data values that would be lost with a smaller color map. Whether this matters depends very much on the range of values in the data, the actual accuracy of the data acquisition, and so on. In summary, the default data storage allows 256 different values and 12-bit storage allows 4096 values.
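The aliasing effect can be reproduced with a simple linear rescaling. The `quantize` function below is a hypothetical illustration, not VolumeViz's documented conversion formula. It maps a value from a data range onto 2^bits levels. Consider a data range of 0 to 4095. With 8 bits (256 levels), the values 32 through 47 all land on the same level. With 12 bits (4096 levels), they stay distinct.

```cpp
#include <cassert>
#include <cstdint>

// Linearly rescales 'value' from [rangeMin, rangeMax] to an unsigned integer with
// 'bits' bits of precision (256 levels for 8 bits, 4096 levels for 12 bits).
// Illustrative only; values outside the range are clamped.
std::uint32_t quantize(double value, double rangeMin, double rangeMax, int bits) {
    assert(rangeMax > rangeMin && bits > 0 && bits <= 16);
    const std::uint32_t levels = 1u << bits;
    double t = (value - rangeMin) / (rangeMax - rangeMin);  // normalized position
    if (t < 0.0) t = 0.0;
    if (t > 1.0) t = 1.0;
    const auto q = static_cast<std::uint32_t>(t * static_cast<double>(levels));
    return q < levels ? q : levels - 1;  // t == 1.0 maps to the top level
}
```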

There is one special case for rendering. If you use the isosurface rendering feature (discussed in Section 1.6, “Height Field Rendering”), you may, depending on your data, get better and more accurate surfaces with 12-bit data values on the GPU. This is mainly a concern for medical and NDT data. RGBA data is also a special case. If you give VolumeViz a volume containing 32-bit RGBA values, it stores those 32-bit values on the GPU. Of course, no color map is used in this case.