The current stereo model allows programmers to add support for specific stereo devices that are not covered by the default stereo types. Supporting a new stereo technique relies on two classes: SoStereoViewer, which describes what a stereo viewer should be (all viewers are of type SoStereoViewer), and SoBaseStereo, which describes any class that supports a stereo technique.

Normally there is no need to write a new stereo viewer, unless a new viewer is being written that does not derive from SoWinViewer, for instance one that derives from SoWinRenderArea. Note that SoStereoViewer is a pure virtual class. Its main role is to allow a specific stereo type to be selected and to trigger the actual rendering (the viewer is the only one that knows how to render a scene). Therefore, when a redraw is needed, the stereo viewer calls the render code of the stereo object. That code sets up what is needed for rendering one eye’s view, calls the actual rendering method on the stereo viewer, sets up what is needed for rendering the other eye’s view, and calls the actual rendering method again.

Other methods act and report on the state of the stereo viewing. This includes all methods related to adjusting the offset as well as the balance, plus the inversion of stereo. In some cases, the stereo class must make assumptions about how the stereo device interprets the data. For instance, in interleaved mode, it is assumed that the even scanlines will be used for the left eye, and odd scanlines for the right eye. If the stereo device interprets the data differently, the scene will appear in pseudo stereo, with distant points appearing closer than near points. Reversing the stereo (switching the left and right eye views) solves this problem.
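The scanline assumption and the effect of reversing the stereo can be illustrated with a small, self-contained sketch. It does not use any Open Inventor class: `Eye`, `eyeForScanline`, and `interleave` are hypothetical names introduced only for illustration, and images are modeled as lists of text rows.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Illustrative names only; none of these are Open Inventor types.
enum class Eye { Left, Right };

// Eye whose view lands on a given scanline. By default even rows go to the
// left eye and odd rows to the right eye; `reversed` swaps the two.
Eye eyeForScanline(int row, bool reversed) {
    assert(row >= 0);
    const bool evenRow = (row % 2 == 0);
    return (evenRow != reversed) ? Eye::Left : Eye::Right;
}

// Build an interleaved image by taking each row from the matching eye's view.
std::vector<std::string> interleave(const std::vector<std::string>& leftView,
                                    const std::vector<std::string>& rightView,
                                    bool reversed) {
    assert(leftView.size() == rightView.size());
    std::vector<std::string> out;
    out.reserve(leftView.size());
    for (std::size_t row = 0; row < leftView.size(); ++row) {
        const Eye eye = eyeForScanline(static_cast<int>(row), reversed);
        out.push_back(eye == Eye::Left ? leftView[row] : rightView[row]);
    }
    return out;
}
```

If a device shows the even rows to the right eye instead, calling `interleave` with `reversed` set to `true` puts each eye's rows where that device expects them, which is the role of stereo reversal described above.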

Finally, other methods act and report on the state of the viewer itself, such as accessing the camera, getting and setting the viewport and the size and position of the window.

SoBaseStereo is the base class of all stereo classes. To implement support for another stereo technique, subclass it. It allows both software and hardware OpenGL stereo to be implemented. Note that all default stereo classes assume that a valid OpenGL context is available, though this is not necessarily a requirement when creating new stereo classes.

The main method in SoBaseStereo is renderStereoView(). This method is called by the stereo viewer to ask the stereo object to start all the work needed to completely render in stereo. Therefore, the code in this method should at least call the method actualRendering() on the stereo viewer. Usually, the code in renderStereoView() follows three steps:
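The division of work between the stereo object and the stereo viewer can be sketched with stand-in classes. This is only an approximation under stated assumptions: `ViewerSketch` and `StereoSketch` are hypothetical stand-ins for SoStereoViewer and SoBaseStereo, `prepareEye()` is an invented hook for the per-eye setup, and only the method names `renderStereoView()` and `actualRendering()` come from the text. The call order follows the sequence described for the stereo viewer: set up one eye, render, set up the other eye, render again.

```cpp
#include <cassert>
#include <string>
#include <vector>

enum class Eye { Left, Right };  // hypothetical, for illustration only

// Stand-in for the viewer side: the only party that knows how to draw.
class ViewerSketch {
public:
    virtual ~ViewerSketch() = default;
    virtual void actualRendering() = 0;
};

// Stand-in for a stereo object driving the two render passes.
class StereoSketch {
public:
    virtual ~StereoSketch() = default;
    void setViewer(ViewerSketch* viewer) { viewer_ = viewer; }

    void renderStereoView() {
        assert(viewer_ != nullptr);
        prepareEye(Eye::Left);       // set up the first eye's view
        viewer_->actualRendering();  // let the viewer draw it
        prepareEye(Eye::Right);      // set up the other eye's view
        viewer_->actualRendering();  // draw again
    }

protected:
    // Hypothetical hook: device-specific setup (buffers, stencil, camera).
    virtual void prepareEye(Eye eye) = 0;

private:
    ViewerSketch* viewer_ = nullptr;
};

// Recording implementations that make the call order observable.
class RecordingViewer : public ViewerSketch {
public:
    explicit RecordingViewer(std::vector<std::string>& log) : log_(log) {}
    void actualRendering() override { log_.push_back("render"); }
private:
    std::vector<std::string>& log_;
};

class RecordingStereo : public StereoSketch {
public:
    explicit RecordingStereo(std::vector<std::string>& log) : log_(log) {}
protected:
    void prepareEye(Eye eye) override {
        log_.push_back(eye == Eye::Left ? "prepare left" : "prepare right");
    }
private:
    std::vector<std::string>& log_;
};
```

Attaching a `RecordingViewer` to a `RecordingStereo` and calling `renderStereoView()` once fills the shared log with the prepare and render calls in the order in which a real stereo class would be expected to issue them.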

C++ :

C# :

Two additional methods, which may be used by the stereo viewer, report on the requirements of the stereo object during one rendering pass:

canClearBeforeRender() is not a request from the stereo object asking the stereo viewer to clear the color buffer. Rather, its return value indicates whether it is safe to clear the color buffer of the stereo object. If this method returns TRUE, the stereo viewer may clear the buffer but does not have to. If it returns FALSE, the viewer should avoid clearing the color buffer because the buffer contains information needed for the stereo effect. For instance, in interleaved mode, after the first eye’s view is rendered, the color buffer already contains every other scanline’s worth of information, thanks to the stencil buffer.
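The interleaved case can be made concrete with a toy model. The sketch below is not Open Inventor code: `renderInterleaved` is a hypothetical function, the color buffer is a string with one character per scanline, and the stencil is modeled by writing only every other row. It assumes a viewer that clears between the two passes whenever it is allowed to.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Toy color buffer: one character per scanline, '.' meaning "cleared".
// Pass 0 writes 'L' on even rows and pass 1 writes 'R' on odd rows, which is
// what a stencil mask would permit in interleaved mode.
// `clearAllowed` plays the role of the value returned by canClearBeforeRender().
std::string renderInterleaved(std::size_t rows, bool clearAllowed) {
    std::string buffer(rows, '.');
    for (std::size_t pass = 0; pass < 2; ++pass) {
        const char eye = (pass == 0) ? 'L' : 'R';
        if (pass > 0 && clearAllowed) {
            buffer.assign(rows, '.');  // the viewer clears before the second pass
        }
        for (std::size_t row = pass; row < rows; row += 2) {
            buffer[row] = eye;  // only the rows the stencil lets through
        }
    }
    return buffer;
}
```

With four scanlines, passing `false` yields `LRLR`, while passing `true` yields `.R.R`: the clear wipes the left eye's rows before the right eye is drawn, which is why an interleaved stereo class would report FALSE.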

requireHardware() is the way for the stereo object to let the stereo viewer know that the selected stereo type requires OpenGL stereo to be activated. If it does, this means the viewer must try to find a pixel format that contains a stereo buffer.
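How a viewer might act on this answer can be sketched as a simple filter over the available pixel formats. Everything here is hypothetical: `PixelFormatSketch` and `chooseFormat` are invented for illustration and do not correspond to a windowing-system or Open Inventor API.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical description of a pixel format; real ones carry many more fields.
struct PixelFormatSketch {
    int id;       // identifier assigned by the (imaginary) windowing system
    bool stereo;  // true if the format provides left and right buffers
};

// Return the index of the first usable format, or -1 if none qualifies.
// `requireHardware` plays the role of the stereo object's requireHardware().
int chooseFormat(const std::vector<PixelFormatSketch>& formats,
                 bool requireHardware) {
    for (std::size_t i = 0; i < formats.size(); ++i) {
        if (!requireHardware || formats[i].stereo) {
            return static_cast<int>(i);
        }
    }
    return -1;  // no stereo-capable format: hardware stereo cannot be enabled
}
```

The `-1` result corresponds to the case where the viewer tries to find a stereo pixel format and fails; how a real viewer reacts in that case is not specified here.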

Finally, a method is provided for clearing any state or resource used by the stereo object when it is no longer in use (for instance when the stereo viewer is set to another stereo object).

This example shows how to render stereo images in an offscreen buffer. It makes use of a custom stereo viewer (this is one exception to what is said in the section called “SoStereoViewer”) and a simple stereo object. The offscreen rendering is done by the stereo viewer. This stereo viewer can’t be used with stereo classes that assume the presence of a valid OpenGL context. The source code is available in: $OIVHOME/src/Inventor/examples/Features/OffscreenStereo/OffscreenStereo.cxx.

Example: Render stereo images in an offscreen buffer

C++ :

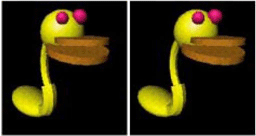

The program then needs to create a scene graph, provide an output method to deal with each rendered image, and create an OffscreenStereo object containing the scene graph. On this object, call the method renderSceneInStereo(). Although this example does not deal with a specific stereo device, it makes use of the Open Inventor stereo model by taking advantage of the view volume reorientation (as described in Camera Projection and View Volume) to deliver two images with high geometric consistency.

Note that the class SimpleStereo can be applied to any viewer, though it would act almost like monoscopic mode. Because canClearBeforeRender() returns TRUE and no other specific code activates a particular device driver, the scene would be rendered twice into whatever OpenGL buffer is current at the moment. The first render would be overwritten by the second, and the scene would appear as seen by one eye (with the view volume adapted to that eye).